Exam 19 December 2011, questions PDF

| Title | Exam 19 December 2011, questions |
|---|---|
| Author | Andreas Piculell |
| Course | Introduktion til machine learning og data mining |
| Institution | Danmarks Tekniske Universitet |
| Pages | 11 |


Summary

Download Exam 19 December 2011, questions PDF

Description

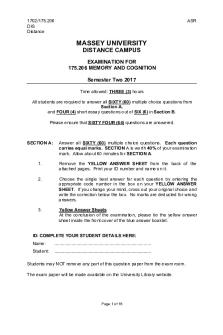

Technical University of Denmark Written examination: 19 December 2011, 9-13. Course name: Introduction to machine learning and data modeling. Course number: 02450. Aids allowed: All aids permitted. Exam duration: 4 hours. Weighting: The individual questions are weighted equally.

The exam is multiple choice. All questions have four possible answers marked by the letters A, B, C, and D as well as the answer “Don’t know” marked by the letter E. Correct answer gives 3 points, wrong answer gives -1 point, and “Don’t know” (E) gives 0 points. The individual questions are answered by filling in the answer fields in the table below with one of the letters A, B, C, D, or E. Notice, in some questions you are asked to identify the correct statement and in other questions the incorrect statement. This is indicated in the text of each question. Please write your name and student number clearly and hand in the present page (page 1) as your answer of the written test. Other pages will not be considered.

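Answers:

| Question | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Answer | | | | | | | | | | | | | | | | | | | | | | | | | | | |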

Name: Student number:

HAND IN THIS PAGE ONLY

| No. | Attribute description | Abbrev. |
|---|---|---|
| x1 | Age of Mother in Whole Years | Age |
| x2 | Mother's Weight in Pounds | MW |
| x3 | Race (1 = Other, 0 = White) | Race |
| x4 | History of Hypertension (1 = Yes, 0 = No) | HT |
| x5 | Uterine Irritability (1 = Yes, 0 = No) | UI |
| x6 | Number of Physician Visits First Trimester | PV |
| y | Birth Weight in Kilograms | BW |

Table 1: Attributes in a study on risk factors associated with giving birth to a low birth weight (less than 2.5 kg) baby [Hosmer and Lemeshow, Applied Logistic Regression, 1989]. The data we consider contains 189 observations, 6 input attributes x1–x6, and one output variable y.

Question 1. Consider the data set described in Table 1. Which statement about the attributes in the data set is correct?

A. Race, HT and UI are ordinal.

B. Age and PV are ratio.

C. Age is continuous and ratio.

D. MW is discrete whereas PV is continuous.

E. Don’t know.

Question 2. Consider the data set described in Table 1. Each attribute in the data set is standardized, and we carry out a principal component analysis (PCA) on the standardized input data, x1–x6. The singular values obtained are: σ1 = 17.0, σ2 = 15.2, σ3 = 13.1, σ4 = 13.0, σ5 = 11.8, σ6 = 11.3. The first and second principal component directions are:

$$v_1 = \begin{bmatrix} 0.5238 \\ 0.5237 \\ -0.3491 \\ 0.1981 \\ -0.3369 \\ 0.4204 \end{bmatrix}, \quad v_2 = \begin{bmatrix} -0.2948 \\ 0.3452 \\ 0.3584 \\ 0.6808 \\ -0.3049 \\ -0.3302 \end{bmatrix}.$$

Which one of the following statements is incorrect?

A. The first three principal components account for more than 90% of the variation in the data.

B. Relatively heavy, old and white mothers that frequently go to the physician and have a history of hypertension but do not have uterine irritability will have a positive projection onto the first principal component.

C. Relatively young, heavy mothers that are not white and have a history of hypertension but infrequently go to the physician and do not have uterine irritability will have a positive projection onto the second principal component.

D. Since the data is standardized we do not need to subtract the mean when performing the PCA but can directly carry out the singular value decomposition on the standardized data.

E. Don’t know.

Question 3. Consider the data set in Table 1. We would like to predict the birth weight y based on the six attributes x1–x6. In order to do so we fit a linear regression model with Forward selection as well as a linear regression with Backward selection using five-fold cross-validation. Which of the following statements is correct?

A. When carrying out the cross-validation the same test set can be used to select attributes and estimate the generalization error of the linear regression model.

B. Five-fold cross-validation gives a more precise estimate of the generalization error than leave-one-out cross-validation.

C. Hold-out is always better than five-fold cross-validation as the same data observations are not used both for training and for testing.

D. In five-fold cross-validation each observation is part of the test set once and part of the training set four times.

E. Don’t know.
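To make the fold bookkeeping behind Question 3 concrete, the following minimal sketch deals 189 observation indices into five disjoint folds and counts how often each observation lands in a test set and in a training set. The helper `k_fold_indices` and the seed are illustrative assumptions, not part of the exam.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle 0..n_samples-1 and deal the indices into k disjoint test folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


n_samples, k = 189, 5  # 189 observations (Table 1), five folds
folds = k_fold_indices(n_samples, k)

test_counts = [0] * n_samples
train_counts = [0] * n_samples
for test_fold in folds:
    held_out = set(test_fold)
    for i in range(n_samples):
        if i in held_out:
            test_counts[i] += 1   # observation i is tested in this fold
        else:
            train_counts[i] += 1  # observation i is used for training in this fold

# Distinct values of the two counters over all observations.
print(set(test_counts), set(train_counts))
```

Because the folds partition the index set, the counters do not depend on the particular shuffle, only on the number of folds.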

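For the singular values quoted in Question 2, the fraction of variance carried by each principal component is proportional to the squared singular value. A short sketch of that arithmetic, with variable names chosen here for illustration, could look like this:

```python
# Singular values as stated in Question 2.
singular_values = [17.0, 15.2, 13.1, 13.0, 11.8, 11.3]

# Variance explained by component i is sigma_i^2 / sum_j sigma_j^2.
squared = [s ** 2 for s in singular_values]
total = sum(squared)
explained = [v / total for v in squared]

# Running total of the explained-variance fractions.
cumulative = []
running = 0.0
for fraction in explained:
    running += fraction
    cumulative.append(running)

for i, (e, c) in enumerate(zip(explained, cumulative), start=1):
    print(f"PC{i}: explained {e:.3f}, cumulative {c:.3f}")
```

Reading off the cumulative column at the third component gives the quantity that statement A of Question 2 refers to.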

Figure 1: Variables selected in each of five cross-validation runs using Forward selection (left panel) and Backward selection (right panel). Green squares indicate attributes that were selected and white squares attributes that were not included in the model in each cross-validation fold.

Question 4. A linear regression model has been fitted to the data in Table 1 using five-fold cross-validated Forward and Backward selection. Figure 1 shows the results in terms of which attributes were selected in each cross-validation fold. Which of the following statements is correct?

A. The optimal attributes will always be selected by either Forward or Backward selection.

B. Forward selection will always give better results than Backward selection as only the most important attributes are selected in each iteration.

C. Backward selection is in general more computationally efficient than Forward selection.

D. The training error performance on the same training data will always decrease as we select more attributes in the model by Forward selection.

E. Don’t know.

Question 5. A linear regression model has been fitted to the data set in Table 1 where the attributes Age and PV have been removed since they were selected by neither Forward nor Backward selection. Furthermore, they are correlated with the remaining attributes. The fitted model, f(x), is given by

$$f(x) = 2.94 + 0.13x_2 - 0.14x_3 - 0.15x_4 - 0.20x_5$$

Taking into account that all attributes (except the output, y = BW) are standardized, which of the following statements is incorrect?

A. The mean birth weight of the babies is 2.94 kg.

B. White women in general give birth to heavier babies than women that are not categorized as white.

C. Hypertension and the presence of uterine irritability seem to lower the birth weight of babies.

D. If we included the attribute PV in the model this would not change the values of the currently estimated parameters of the model since the input data is standardized.

E. Don’t know.
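The fitted model in Question 5 can be evaluated directly. The sketch below assumes standardized inputs, so that a value of 0 corresponds to the sample mean of an attribute and a value of 1 to one standard deviation above it; the function name and the example inputs are hypothetical.

```python
def predict_birth_weight(mw, race, ht, ui):
    """Evaluate f(x) from Question 5 on standardized inputs x2 (MW), x3 (Race), x4 (HT), x5 (UI)."""
    return 2.94 + 0.13 * mw - 0.14 * race - 0.15 * ht - 0.20 * ui


# All standardized inputs at zero, i.e. every attribute at its sample mean.
at_mean = predict_birth_weight(0.0, 0.0, 0.0, 0.0)

# Hypothetical inputs: one attribute raised by one standard deviation at a time.
heavier_mother = predict_birth_weight(1.0, 0.0, 0.0, 0.0)
more_hypertension = predict_birth_weight(0.0, 0.0, 1.0, 0.0)
more_irritability = predict_birth_weight(0.0, 0.0, 0.0, 1.0)

print(at_mean, heavier_mother, more_hypertension, more_irritability)
```

The sign of each coefficient determines whether the prediction moves above or below the intercept when that attribute alone is increased.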

Question 6. Ten mothers, denoted m1, m2, ..., m10, have been randomly selected from the data set described in Table 1. We here consider only the attributes MW, HT, UI and BW, and the continuous attribute MW has been binarized in terms of the attribute’s values being above or below the median value of all the 189 observations. $BW_L$ and $BW_H$ denote a low birth weight baby and a high birth weight baby respectively. The binarized data is given in Table 2. What are all the frequent itemsets with support greater than 45%?

A. $\{MW_L\}$, $\{HT_N\}$, $\{UI_N\}$, $\{BW_L\}$.

B. $\{MW_L\}$, $\{HT_N\}$, $\{UI_N\}$, $\{BW_L\}$, $\{MW_L, HT_N\}$, $\{MW_L, UI_N\}$, $\{MW_L, BW_L\}$, $\{HT_N, UI_N\}$, $\{HT_N, BW_L\}$.

C. $\{MW_L\}$, $\{HT_N\}$, $\{UI_N\}$, $\{BW_L\}$, $\{MW_L, HT_N\}$, $\{MW_L, UI_N\}$, $\{MW_L, BW_L\}$, $\{HT_N, UI_N\}$, $\{HT_N, BW_L\}$, $\{MW_L, HT_N, UI_N\}$.

D. $\{MW_L\}$, $\{HT_N\}$, $\{UI_N\}$, $\{BW_L\}$, $\{MW_L, HT_N\}$, $\{MW_L, UI_N\}$, $\{MW_L, BW_L\}$, $\{HT_N, UI_N\}$, $\{HT_N, BW_L\}$, $\{MW_L, HT_N, UI_N\}$, $\{MW_L, HT_N, BW_L\}$.

E. Don’t know.

Question 7. Consider the data in Table 2. What is the confidence of the association rule $\{BW_L\} \leftarrow \{MW_L, HT_N\}$?

A. 5/10

B. 5/7

C. 7/10

D. 9/10

E. Don’t know.

Question 8. Consider the data set in Table 2. A mother who has a low weight and has neither a history of hypertension nor uterine irritability is just about to give birth to a baby. We would like to predict if the baby will have a low birth weight. For the mother we have $MW_L = 1$, $HT_N = 1$, $UI_N = 1$. We will classify the mother considering only the attributes $MW_L$, $HT_N$, and $UI_N$, based on the data in Table 2 only. We will use a Naïve Bayes classifier that assumes independence between these attributes. What is the probability that the weight of the baby will be low, i.e., $BW_L = 1$?

A. 1/5

B. 1/3

C. 3/5

D. 5/8

E. Don’t know.

| | $MW_L$ | $MW_H$ | $HT_N$ | $HT_Y$ | $UI_N$ | $UI_Y$ | $BW_L$ | $BW_H$ |
|---|---|---|---|---|---|---|---|---|
| m1 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 1 |
| m2 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 1 |
| m3 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0 |
| m4 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| m5 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 1 |
| m6 | 1 | 0 | 1 | 0 | 0 | 1 | 1 | 0 |
| m7 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| m8 | 0 | 1 | 1 | 0 | 1 | 0 | 1 | 0 |
| m9 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| m10 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 1 |

Table 2: Ten randomly selected mothers and their properties in terms of mother's weight (MW), history of hypertension (HT), uterine irritability (UI) and birth weight (BW). $MW_L$ and $MW_H$ denote that the mother's weight is lower than the median value and higher than the median value respectively, whereas subscript N denotes no (i.e. attribute value is 0) and Y denotes yes (i.e. attribute value is 1). $BW_L$ and $BW_H$ denote a low birth weight baby or a high birth weight baby respectively.
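The quantities asked for in Questions 6 to 8 (itemset support, rule confidence, and a Naïve Bayes posterior) can all be computed from Table 2 by counting. The sketch below is one possible way to do so; the function names are illustrative, the itemset search is a brute-force enumeration rather than the Apriori algorithm, and no smoothing is applied to the Naïve Bayes estimates.

```python
from fractions import Fraction
from itertools import combinations

# Table 2, one row per mother m1..m10, columns in the order of ITEMS.
ITEMS = ["MW_L", "MW_H", "HT_N", "HT_Y", "UI_N", "UI_Y", "BW_L", "BW_H"]
ROWS = [
    (1, 0, 1, 0, 1, 0, 0, 1),  # m1
    (0, 1, 1, 0, 1, 0, 0, 1),  # m2
    (1, 0, 1, 0, 0, 1, 1, 0),  # m3
    (1, 0, 1, 0, 1, 0, 1, 0),  # m4
    (1, 0, 1, 0, 1, 0, 0, 1),  # m5
    (1, 0, 1, 0, 0, 1, 1, 0),  # m6
    (1, 0, 1, 0, 1, 0, 1, 0),  # m7
    (0, 1, 1, 0, 1, 0, 1, 0),  # m8
    (1, 0, 1, 0, 1, 0, 1, 0),  # m9
    (0, 1, 1, 0, 1, 0, 0, 1),  # m10
]
TRANSACTIONS = [{item for item, bit in zip(ITEMS, row) if bit} for row in ROWS]


def support(itemset):
    """Fraction of transactions that contain every item of the itemset."""
    itemset = set(itemset)
    hits = sum(1 for t in TRANSACTIONS if itemset <= t)
    return Fraction(hits, len(TRANSACTIONS))


def confidence(antecedent, consequent):
    """Confidence of the rule consequent <- antecedent."""
    return support(set(antecedent) | set(consequent)) / support(antecedent)


def frequent_itemsets(min_support):
    """Brute-force list of all itemsets with support strictly above min_support."""
    found = []
    for size in range(1, len(ITEMS) + 1):
        for candidate in combinations(ITEMS, size):
            if support(candidate) > min_support:
                found.append(candidate)
    return found


def naive_bayes_posterior(target, evidence):
    """P(target = 1 | every evidence item = 1), assuming conditional independence."""
    t = ITEMS.index(target)
    scores = {}
    for label in (1, 0):
        group = [row for row in ROWS if row[t] == label]
        score = Fraction(len(group), len(ROWS))  # class prior
        for item in evidence:
            j = ITEMS.index(item)
            score *= Fraction(sum(row[j] for row in group), len(group))
        scores[label] = score
    return scores[1] / (scores[1] + scores[0])


frequent = frequent_itemsets(Fraction(45, 100))
rule_confidence = confidence({"MW_L", "HT_N"}, {"BW_L"})
p_low = naive_bayes_posterior("BW_L", ["MW_L", "HT_N", "UI_N"])
print(frequent, rule_confidence, p_low)
```

Exact fractions are used so that the results can be compared with the answer options without rounding.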

| | m1 | m2 | m3 | m4 | m5 | m6 | m7 | m8 | m9 | m10 |
|---|---|---|---|---|---|---|---|---|---|---|
| m1 | 0 | 6.1 | 3.2 | 3.8 | 4.8 | 3.5 | 3.7 | 3.7 | 4.6 | 4.3 |
| m2 | 6.1 | 0 | 5.4 | 4.4 | 4.8 | 4.8 | 6.0 | 5.0 | 4.2 | 5.5 |
| m3 | 3.2 | 5.4 | 0 | 3.5 | 3.7 | 4.0 | 1.4 | 4.0 | 3.3 | 3.4 |
| m4 | 3.8 | 4.4 | 3.5 | 0 | 3.2 | 2.1 | 4.3 | 2.4 | 2.1 | 4.5 |
| m5 | 4.8 | 4.8 | 3.7 | 3.2 | 0 | 4.1 | 3.9 | 4.4 | 1.5 | 4.5 |
| m6 | 3.5 | 4.8 | 4.0 | 2.1 | 4.1 | 0 | 4.8 | 0.6 | 3.0 | 3.9 |
| m7 | 3.7 | 6.0 | 1.4 | 4.3 | 3.9 | 4.8 | 0 | 4.8 | 4.0 | 4.4 |
| m8 | 3.7 | 5.0 | 4.0 | 2.4 | 4.4 | 0.6 | 4.8 | 0 | 3.3 | 4.1 |
| m9 | 4.6 | 4.2 | 3.3 | 2.1 | 1.5 | 3.0 | 4.0 | 3.3 | 0 | 3.8 |
| m10 | 4.3 | 5.5 | 3.4 | 4.5 | 4.5 | 3.9 | 4.4 | 4.1 | 3.8 | 0 |

Table 3: The Euclidean distance between each of the ten selected mothers based on all the 6 attributes x1–x6. Red denotes mothers that gave birth to a baby with low birth weight (see also Table 2).

Question 9. Table 3 shows the Euclidean distance between the ten selected mothers based on all the 6 attributes x1–x6 in Table 1. We recall that the KNN density and average relative density for the observation x are given by

$$\text{density}(x, K) = \left( \frac{1}{K} \sum_{y \in N(x,K)} \text{distance}(x, y) \right)^{-1}$$

$$\text{a.r.d.}(x, K) = \frac{\text{density}(x, K)}{\frac{1}{K} \sum_{y \in N(x,K)} \text{density}(y, K)}$$

where N(x, K) is the set of K nearest neighbors of observation x and a.r.d.(x, K) is the average relative density of x using K nearest neighbors. Based on the data in Table 3, what is the average relative density for observation m2 for K = 2 nearest neighbors?

A. 1.5/4.2

B. 252/559

C. 390/733

D. 43/39

E. Don’t know.

Question 10. Out of the ten mothers in the data in Table 2, m3, m4, m6, m7, m8, and m9 gave birth to a baby with low birth weight, i.e., $BW_L = 1$. Based on the distances in Table 3, we will use K-nearest neighbor classification with K = 1 to classify each observation. The performance of the classifier is estimated using leave-one-out cross-validation. Which of the following statements about the leave-one-out cross-validation error is incorrect?

A. Five of the observations will be misclassified.

B. m1, m2, m3, m5 and m10 will be misclassified.

C. All the mothers giving birth to babies with high birth weight will be misclassified.

D. One of the mothers giving birth to a baby with low birth weight will be misclassified.

E. Don’t know.

Question 11. In a very large unpublished study the following findings were made:

- 60% of mothers that have a history of hypertension give birth to a baby with low birth weight.
- 8% of mothers that do not have a history of hypertension give birth to a baby with low birth weight.
- 10% of all mothers have a history of hypertension.

A mother gives birth to a baby with low birth weight. What is the probability the mother has a history of hypertension according to the unpublished study?

A. 3/50

B. 5/11

C. 6/11

D. 3/5

E. Don’t know.
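The three percentages given in Question 11 are exactly the inputs to Bayes' theorem for a binary event. A minimal sketch of that calculation with exact fractions follows; the function and variable names are chosen here for illustration.

```python
from fractions import Fraction


def posterior(prior, likelihood_given_event, likelihood_given_not_event):
    """Bayes' theorem for a binary event: P(event | observation)."""
    evidence = (likelihood_given_event * prior
                + likelihood_given_not_event * (1 - prior))
    return likelihood_given_event * prior / evidence


p_ht = Fraction(10, 100)              # P(history of hypertension)
p_low_given_ht = Fraction(60, 100)    # P(low birth weight | hypertension)
p_low_given_no_ht = Fraction(8, 100)  # P(low birth weight | no hypertension)

p_ht_given_low = posterior(p_ht, p_low_given_ht, p_low_given_no_ht)
print(p_ht_given_low)
```

The denominator is the total probability of a low birth weight, obtained by summing over mothers with and without a history of hypertension.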

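The KNN density and average relative density from Question 9, and the leave-one-out 1-nearest-neighbor evaluation from Question 10, both operate on the distance matrix in Table 3. The following sketch transcribes that matrix and implements both computations; the function names are assumptions, and ties between equally distant neighbors are broken by index order, which is a choice made here rather than something the exam specifies.

```python
# Table 3: Euclidean distances between mothers m1..m10 (symmetric, zero diagonal).
D = [
    [0, 6.1, 3.2, 3.8, 4.8, 3.5, 3.7, 3.7, 4.6, 4.3],
    [6.1, 0, 5.4, 4.4, 4.8, 4.8, 6.0, 5.0, 4.2, 5.5],
    [3.2, 5.4, 0, 3.5, 3.7, 4.0, 1.4, 4.0, 3.3, 3.4],
    [3.8, 4.4, 3.5, 0, 3.2, 2.1, 4.3, 2.4, 2.1, 4.5],
    [4.8, 4.8, 3.7, 3.2, 0, 4.1, 3.9, 4.4, 1.5, 4.5],
    [3.5, 4.8, 4.0, 2.1, 4.1, 0, 4.8, 0.6, 3.0, 3.9],
    [3.7, 6.0, 1.4, 4.3, 3.9, 4.8, 0, 4.8, 4.0, 4.4],
    [3.7, 5.0, 4.0, 2.4, 4.4, 0.6, 4.8, 0, 3.3, 4.1],
    [4.6, 4.2, 3.3, 2.1, 1.5, 3.0, 4.0, 3.3, 0, 3.8],
    [4.3, 5.5, 3.4, 4.5, 4.5, 3.9, 4.4, 4.1, 3.8, 0],
]
# Zero-based indices of m3, m4, m6, m7, m8, m9 (low birth weight, Question 10).
LOW_BW = {2, 3, 5, 6, 7, 8}


def nearest_neighbors(i, k):
    """Indices of the k observations closest to i, excluding i itself."""
    others = [j for j in range(len(D)) if j != i]
    return sorted(others, key=lambda j: D[i][j])[:k]


def density(i, k):
    """Inverse of the mean distance from i to its k nearest neighbors."""
    neighbors = nearest_neighbors(i, k)
    return 1.0 / (sum(D[i][j] for j in neighbors) / k)


def average_relative_density(i, k):
    """Density of i divided by the mean density of its k nearest neighbors."""
    neighbors = nearest_neighbors(i, k)
    return density(i, k) / (sum(density(j, k) for j in neighbors) / k)


def loo_1nn_errors():
    """Names of observations whose nearest other observation has a different class."""
    errors = []
    for i in range(len(D)):
        (j,) = nearest_neighbors(i, 1)
        if (j in LOW_BW) != (i in LOW_BW):
            errors.append(f"m{i + 1}")
    return errors


ard_m2 = average_relative_density(1, 2)  # m2 has zero-based index 1
misclassified = loo_1nn_errors()
print(ard_m2, misclassified)
```

With a precomputed distance matrix, leaving one observation out amounts to ignoring the diagonal entry when searching for the nearest neighbor.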

Figure 2: A classifier has given the score t to observations belonging to the two classes "False" and "True".

Question 12. We will consider a two-class classification problem. A classifier has been trained that gives a score t to each observation. Figure 2 shows the value of the score for each observation, as well as the density of the two classes as a function of the score t estimated using a kernel density estimator. Figure 3 shows the ROC curves for three different classifiers. Which of the three ROC curves corresponds to the classifier that scores the observations according to Figure 2?

A. ROC curve A corresponds to the classifier.

B. ROC curve B corresponds to the classifier.

C. ROC curve C corresponds to the classifier.

D. It is not possible to generate a ROC curve for the classifier since this is a non-linear classification problem.

E. Don’t know.

Figure 3: Three Receiver Operator Characteristic (ROC) curves (panels: ROC curve A, ROC curve B, ROC curve C).
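As a reminder of how a ROC curve such as those in Question 12 is obtained from classifier scores, the sketch below sweeps a threshold over the scores and records the false positive rate and true positive rate at each step. The scores and labels are hypothetical and are not read off Figure 2; the function names are likewise illustrative.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) pairs obtained by lowering a threshold over the scores."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted "True"
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical scores t and class labels (1 = "True", 0 = "False").
scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 1, 0, 1, 0, 1, 1]

curve = roc_points(scores, labels)
area = auc(curve)
print(curve, area)
```

The shape of the curve depends only on how the scores of the two classes are ordered relative to each other, which is why overlapping score densities pull the curve toward the diagonal.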


Figure 4: A dataset containing four classes.

Question 13. A dataset with two attributes x1 and x2 contains the four classes illustrated in Figure 4. Which of the following statements is correct?

A. Since the covariance is not the same for the four classes the k-means algorithm is better suited to cluster the data than clustering by the Gaussian Mixture Model (GMM).

B. It is not possible to say anything about the value of x2 from observing x1 for data observations in class 4.

C. x1 and x2 are positively correlated in class 1 and negatively correlated in class 4.

D. The four classes can be better identified as groups using a four component Gaussian Mixture Model (GMM) with full covariance matrices than a four component Gaussian Mixture Model (GMM) with diagonal covariance matrices.

E. Don’t know.
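Question 13 contrasts Gaussian components with full and with diagonal covariance matrices. The sketch below illustrates the difference on a single synthetic, correlated two-dimensional cluster: it fits one Gaussian by maximum likelihood with and without the off-diagonal covariance term and compares the mean log-likelihood. The data are generated here and are not the data of Figure 4; a complete GMM would additionally estimate mixing weights with the EM algorithm, which is omitted.

```python
import math
import random


def fit_gaussian(points, diagonal=False):
    """Maximum-likelihood mean and 2x2 covariance; off-diagonal term zeroed if diagonal."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = 0.0 if diagonal else sum((x - mx) * (y - my) for x, y in points) / n
    return (mx, my), (sxx, sxy, syy)


def mean_log_likelihood(points, mean, cov):
    """Average log density of the points under a 2-D Gaussian."""
    mx, my = mean
    sxx, sxy, syy = cov
    det = sxx * syy - sxy ** 2
    total = 0.0
    for x, y in points:
        dx, dy = x - mx, y - my
        # Quadratic form using the closed-form inverse of a 2x2 matrix.
        quad = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
        total += -0.5 * quad - math.log(2 * math.pi) - 0.5 * math.log(det)
    return total / len(points)


# Synthetic cluster in which x2 depends linearly on x1 (hypothetical data).
rng = random.Random(0)
points = []
for _ in range(500):
    x1 = rng.gauss(0.0, 1.0)
    x2 = 0.8 * x1 + rng.gauss(0.0, 0.5)
    points.append((x1, x2))

ll_full = mean_log_likelihood(points, *fit_gaussian(points))
ll_diag = mean_log_likelihood(points, *fit_gaussian(points, diagonal=True))
print(ll_full, ll_diag)
```

A diagonal covariance matrix can only describe axis-aligned ellipses, so any correlation between x1 and x2 within a class is invisible to it.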

Figure 5: Decision boundaries for three different classifiers. The decision boundaries indicated by white, light gray, dark gray and black are for classifying the green triangle, blue dot, orange circle and red square classes respectively.


Question 14. Three different classifiers are trained on all the available data in Figure 4 using only x1 and x2 as attributes. The data as well as the decision boundaries of each of the three classifiers are given in Figure 5. Which of the following statements is correct?

A. Classifier A is given by a multinomial regression, classifier B a neural network with 10 hidden units and classifier C a decision tree.

B. Classifier A is a 1-nearest neighbor classifier, classifier B a neural network with 10 hidden units and classifier C a multinomial regression.

C. Classifier A is a decision tree classifier, classifier B a multinomial regression and classifier C a neural network with 10 hidden units.

D. Classifier A is a decision tree classifier, classifier B a multinomial regression and classifier C a 1-nearest neighbor classifier.

E. Don’t know.

Question 15. We would like to combine classifiers in order to improve the classification performance. Which one of the following statements is correct?

A. Combining classifiers will in general not improve the classification performance if their errors are independent.

B. In Boosting the data set is sampled with replacement from a uniform distribution in each Boosting round.

C. In Bagging the dataset is sampled without replacement from a uniform distribution.

D. The main difference between Bagging and Boosting is the distribution from which the data is sampled.

E. Don’t know.

Figure 6: The confusion matrix for classifier B.

Question 16. Out of the three classifiers in Figure 5, it turns out that classifier B is the worst performing classifier. In Figure 6 we inspect the confusion matrix of this classifier. Which of the following statements is incorrect?

A. The error rate of classifier B is 66/2000.

B. Classifier B is performing significantly better than random guessing.

C. The main confusion of classifier B is given by observations in class 4 being classified as class 1.

D. The classification problem has class-imbalance issues that should be addressed.

E. Don’t know.

Question 17. Which of the following statements about decision trees is incorrect?

A. The decision rules are quite easy to interpret.

B. Decision trees can be constructed for data with many attributes.

C. Overfitting may occur.

D. Decision trees are particularly suited for continuous attributes.

E. Don’t know.
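Question 15 turns on how training sets are drawn in Bagging and Boosting. As a rough sketch, assuming the resampling formulation of Boosting in which observations are redrawn according to per-observation weights, the two sampling schemes could be written as follows. The function names and the example weights are hypothetical.

```python
import random


def bootstrap_sample(n, rng):
    """Draw n indices uniformly at random with replacement (one Bagging round)."""
    return [rng.randrange(n) for _ in range(n)]


def weighted_sample(weights, rng):
    """Draw len(weights) indices with replacement, proportionally to the weights."""
    return rng.choices(range(len(weights)), weights=weights, k=len(weights))


rng = random.Random(0)

# Bagging-style round: every observation is equally likely in every draw.
bagging_round = bootstrap_sample(1000, rng)

# Boosting-style round with hypothetical weights: observation 2 carries all the
# weight, as if it were the only one misclassified so far.
boosting_round = weighted_sample([0.0, 0.0, 1.0, 0.0], rng)

print(len(set(bagging_round)), boosting_round)
```

Both schemes draw with replacement, so some observations appear several times in a round and others not at all.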


| Article | Soprano | Movie | Music | Program | Opera | Actor | Orchestra | Picture |
|---|---|---|---|---|---|---|---|---|
| #1 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| #2 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 |
| #3 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |
| #4 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |
| #5 | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 0 |

Table 4: The occurrence of eight keywords (attributes) in five news articles (observations). For each article, an attribute is equal to “1” if the keyword occurs and equal to “0” otherwise. Observations that deal with “ballet and opera” are given in red and observations that deal with “motion pictures” in black.

Question 18. The data in Table 4 shows whether or not eight keywords occur in five news articles (i.e., the data has eight attributes and five observations). Which statement about the data is incorrect?

A. Each of the attributes in the data has standard deviation equal to 1.

B. The maximal simple matching coefficient between any two articles is equal to 7/8.

C. For “Soprano” and “Program” the median and the mode are identical.

D. The Jaccard coefficient between #1 and #3 is equal to 3/4.

E. Don’t know.

Question 19. What is the empirical covariance between the two attributes “Opera” and “Orchestra” in the data in Table 4?

A. 0.8.

B. 0.5.

C. 0.3.

D. 0.2.

E. Don’t know.

Question 20. The following proposed clustering procedure (step 1 to 3 below) for the five articles in Table 4 contains an error:

1) The articles are clustered using k-means with k = 2.
2) The simple matching coefficient (SMC) is used as similarity measure.
3) The centroids are computed as the component-wise mean.

Which statement about the erroneous clustering procedure is correct?

A. The simple matching coefficient is not suitable for binary attributes.

B. It must be defined how to compute the simple matching coefficient between the binary data objects and the continuous centroids.

C. The k-means algorithm cannot be applied to binary data.

D. The k-means algorithm will not work when there are more attributes than data objects.

E. Don’t know.
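The similarity measures and the covariance referred to in Questions 18 and 19 can be computed directly from Table 4. The sketch below transcribes the table and evaluates the simple matching coefficient, the Jaccard coefficient and the covariance with exact fractions. The function names are illustrative, and the covariance uses the N - 1 divisor, which is an assumption about what "empirical covariance" means here.

```python
from fractions import Fraction
from itertools import combinations

KEYWORDS = ["Soprano", "Movie", "Music", "Program",
            "Opera", "Actor", "Orchestra", "Picture"]
# Table 4, one binary keyword vector per article.
ARTICLES = {
    1: (0, 0, 1, 0, 1, 0, 1, 0),
    2: (0, 1, 0, 0, 0, 1, 0, 1),
    3: (1, 0, 1, 0, 1, 0, 1, 0),
    4: (0, 1, 0, 0, 0, 1, 0, 0),
    5: (1, 0, 1, 1, 0, 0, 1, 0),
}


def smc(a, b):
    """Simple matching coefficient: fraction of attributes on which a and b agree."""
    return Fraction(sum(x == y for x, y in zip(a, b)), len(a))


def jaccard(a, b):
    """Jaccard coefficient: shared 1s divided by attributes where either has a 1."""
    both = sum(x == 1 and y == 1 for x, y in zip(a, b))
    either = sum(x == 1 or y == 1 for x, y in zip(a, b))
    return Fraction(both, either)


def column(keyword):
    """Values of one keyword attribute over articles #1..#5."""
    j = KEYWORDS.index(keyword)
    return [ARTICLES[k][j] for k in sorted(ARTICLES)]


def covariance(xs, ys):
    """Covariance with the N - 1 divisor (assumed convention)."""
    n = len(xs)
    mx, my = Fraction(sum(xs), n), Fraction(sum(ys), n)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)


max_smc = max(smc(ARTICLES[i], ARTICLES[j]) for i, j in combinations(ARTICLES, 2))
jaccard_1_3 = jaccard(ARTICLES[1], ARTICLES[3])
cov_opera_orchestra = covariance(column("Opera"), column("Orchestra"))
print(max_smc, jaccard_1_3, cov_opera_orchestra)
```

The SMC counts joint absences as agreement whereas the Jaccard coefficient ignores them, which is why the two measures differ on sparse keyword data.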

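Step 3 of the procedure in Question 20 computes centroids as component-wise means. A tiny sketch shows what such a centroid looks like for binary keyword vectors; the grouping of articles #1, #3 and #5 into one cluster is a hypothetical choice made only for illustration.

```python
def centroid(vectors):
    """Component-wise mean of equally long vectors (the k-means centroid update)."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]


# Hypothetical cluster made of three rows of Table 4.
cluster = [
    (0, 0, 1, 0, 1, 0, 1, 0),  # article #1
    (1, 0, 1, 0, 1, 0, 1, 0),  # article #3
    (1, 0, 1, 1, 0, 0, 1, 0),  # article #5
]

center = centroid(cluster)
is_binary = all(value in (0, 1) for value in center)
print(center, is_binary)
```

Once a centroid has fractional components, a similarity defined by counting exact matches between binary values no longer has an obvious meaning for it.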

Figure 7: Four possible hierarchical clusterings of the five articles in Table 4

Consider using a decision tree to classify news articles as belonging to either of these two groups. If you use Gini index as impurity measure, what would the purity gain be if the attribute “Soprano” is used in the first split?

A. ∆ ≈ 0.27.

B. ∆ ≈ 0.21.

C. ∆ = 25 .

D. ∆ = 51 .

E. Don’t know.

Question 23. Consider a decision tree optimized to classify the news articles in Table 4 into the two groups described in Question 22. The decision tree is optimized with Hunt’s algorithm using the Gini index as impurity measure, and no pruning is performed. Which of the following statements is correct?

A. The optimal decision tree contains four splits.

B. Neither of the attributes “Soprano”, “Program”, “Opera”, or “Picture” is used in a test condition in the optimal decision tree.

C. The test condi...
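The purity gain under the Gini index, as asked about for the “Soprano” split, is the impurity of the parent node minus the size-weighted impurity of the child nodes. The sketch below implements that definition. The class labels are an assumption: the color coding of Table 4 did not survive, so articles #1, #3 and #5 are taken to be the “ballet and opera” group and #2 and #4 the “motion pictures” group, based on which keywords they contain.

```python
from fractions import Fraction


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1 - sum(Fraction(labels.count(c), n) ** 2 for c in set(labels))


def purity_gain(feature, labels):
    """Parent impurity minus the size-weighted impurity of the branches."""
    n = len(labels)
    gain = gini(labels)
    for value in set(feature):
        branch = [y for x, y in zip(feature, labels) if x == value]
        gain -= Fraction(len(branch), n) * gini(branch)
    return gain


# "Soprano" column of Table 4 for articles #1..#5.
soprano = [0, 0, 1, 0, 1]
# ASSUMED labels (the red/black coding is lost in this copy of the exam).
labels = ["opera", "film", "opera", "film", "opera"]

gain = purity_gain(soprano, labels)
print(gain, float(gain))
```

Under a different assignment of articles to the two groups the gain would change, so the result is only as reliable as the assumed labels.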
